The Department of Mathematics, Faculty of Mathematics and Natural Sciences (FMIPA), Universitas Gadjah Mada (UGM), is strengthening its contribution to climate risk management through international collaboration with FINCAPES (Flood Impacts, Carbon Pricing, and Ecosystem Sustainability). FINCAPES is a gender-responsive initiative led by the University of Waterloo and supported by funding from the Government of Canada through Canada’s Indo-Pacific Strategy, with a focus on strengthening flood resilience, promoting nature-based solutions, and enhancing climate policy, including carbon financing. ...

Category: Article

Department of Mathematics, FMIPA UGM and FINCAPES Hold Technical Guidance for Flood Loss Survey Enumerators in Pontianak City

Pontianak, 16 January 2026 – The Department of Mathematics, Faculty of Mathematics and Natural Sciences (FMIPA), Universitas Gadjah Mada (UGM), in collaboration with Universitas Tanjungpura (UNTAN), conducted a Technical Guidance session (BimTek) for enumerators of the Pontianak City Flood Loss Survey, as part of the series of activities under the FINCAPES Project (Flood Impacts, Carbon Pricing, and Ecosystem Sustainability). ...

Ahead of Final Exams, METRIK Room Filled with Intense Discussions and Growing Student Enthusiasm

A different atmosphere could be felt in the undergraduate building of FMIPA UGM during the 14th week of lectures. As the Odd Semester Final Examinations (UAS) 2025/2026 approach, the METRIK Room has recorded a significant surge in consultation activity. As an academic support facility for undergraduate students, METRIK serves as a space for discussions, questions, and resolving lingering academic issues. Students from various cohorts come and go—some carrying stacks of notes, others taking deep breaths before conveying their academic concerns. All are racing to solidify their understanding before the exams, which are just days away. ...

UGM Achieves Outstanding Results at Satria Data 2025

Universitas Gadjah Mada (UGM), through its four representative teams, once again achieved remarkable success at the 2025 Statistika Ria and Festival Sains Data (Satria Data). The final round of the competition was held at the Islamic University of Indonesia, Yogyakarta, on 3–6 November 2025. Satria Data is a national competition organized by the Directorate of Learning and Student Affairs, Directorate General of Higher Education of the Ministry of Higher Education, Science, and Technology, as an effort to enhance students’ competencies in statistics and data science—fields that are increasingly relevant in the digital era. ...

Innovative! UGM’s Gen-Z Berkah Team Wins 3rd Place in ASIC ARSEN 2025 with Their Earthquake-Resilient City Concept through the SERBU Framework

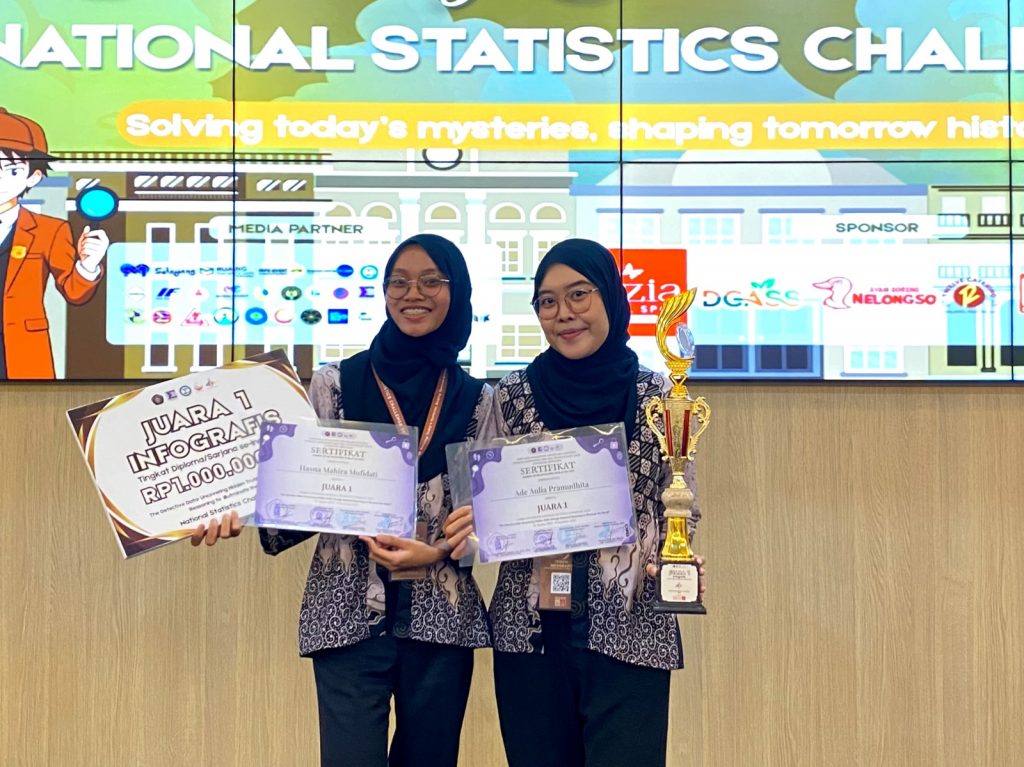

INFOMATE Team Wins First Place in the Infographics Competition at the National Statistics Challenge 2025

Statistics students from Universitas Gadjah Mada (UGM) have once again achieved an outstanding national accomplishment. At the Final Round of the National Statistics Challenge (NSC) 2025, held by Studio Statistika, Universitas Brawijaya, on 2 November 2025 in Malang, the INFOMATE team secured First Place in the Infographics category. The team consists of two students, Hasna Mahira Mufidati and Ade Aulia Pramudhita. ...

Our Victory Story at ASEC 2025: Transforming Data into Real Solutions for Jabodetabek

Surabaya, November 1, 2025 – After going through a long and challenging competition process, our team succeeded in winning 1st Place in the Airlangga Statistics Essay Competition (ASEC) 2025. This victory is not only a personal achievement but also a validation of our belief that statistical science is key to solving complex urban problems. ...

UGM Mathematics Department Students Win 1st Place at the National Airlangga Statistics Event 2025

Students from the Department of Mathematics, Faculty of Mathematics and Natural Sciences, Universitas Gadjah Mada, have once again achieved an outstanding accomplishment at the national level. In the Airlangga Statistics Event (ASE) 2025, the Starlite team—consisting of Ade Aulia Pramudhita and Gabriela Anastasia Putri Sugiarto from the Undergraduate Statistics Program—successfully secured 1st Place, outperforming participants from various universities across Indonesia. ...

UGM Department of Mathematics, Universitas Alma Ata, and RMI PBNU Collaborate to Address Santri Numeracy Gaps through the “Inclusive Mathematics” Forum

YOGYAKARTA, 15 November 2025 — The Department of Mathematics, Faculty of Mathematics and Natural Sciences (FMIPA), Universitas Gadjah Mada (UGM) held the Pesantren Mathematics Teachers’ Forum themed “Inclusive Mathematics” on 15 November 2025. This program is a collaboration between the Department of Mathematics UGM, the Mathematics Education Study Program of Universitas Alma Ata (UAA) Yogyakarta, and Rabithah Ma’ahid Islamiyah (RMI) PBNU, aimed at strengthening teachers’ capacity in managing diverse levels of mathematical understanding among students and enhancing numeracy literacy within Islamic boarding schools (pesantren). ...

Department of Mathematics, FMIPA UGM Holds Community Service Program on Mathematical Applications and Introduction to Artificial Intelligence

Purbalingga – The Department of Mathematics, Faculty of Mathematics and Natural Sciences (FMIPA), Universitas Gadjah Mada (UGM), organized a community service program in Purbalingga Regency in the form of a seminar and training entitled “Enhancing the Competence of MGMP Mathematics Teachers of SMA/MA in Purbalingga Regency through Training on Mathematical Applications for Learning Data Processing.” The activity was held on September 1, 2025 at SMA Negeri 1 Bobotsari, Purbalingga, and was attended by mathematics teachers who are members of the Mathematics Subject Teacher Working Group (MGMP) of SMA/MA throughout Purbalingga. This program is part of the Department of Mathematics’ ongoing efforts to strengthen the capacity of educators in utilizing technology and mathematical applications to support classroom learning. ...