Marking the first week of the Even Semester of the 2025/2026 Academic Year, undergraduate students of Mathematics, Statistics, and Actuarial Science from the Class of 2025 participated in the RESET & RISE: Turning Semester 1 Lessons into a Strong First Year and After, held at the 7th Floor Auditorium of the S1 Building, Faculty of Mathematics and Natural Sciences, Universitas Gadjah Mada, on Saturday (14/2). ...

Tag: SDG 4: Quality Education

Strengthening Research Competitiveness, Demomatika#16 Discusses Competitive Proposal Strategies with Dr. Paolo Giordano from University of Vienna

Demomatika#16 once again presented an academic forum focused on strengthening the research capacity of postgraduate students. Carrying the theme “From Idea to Approval: Strategies for Winning Research Proposals,” the event was held on Monday, 9 February 2026, from 09.30 to 12.00 WIB at the RMJT Soehakso Auditorium, FMIPA. ...

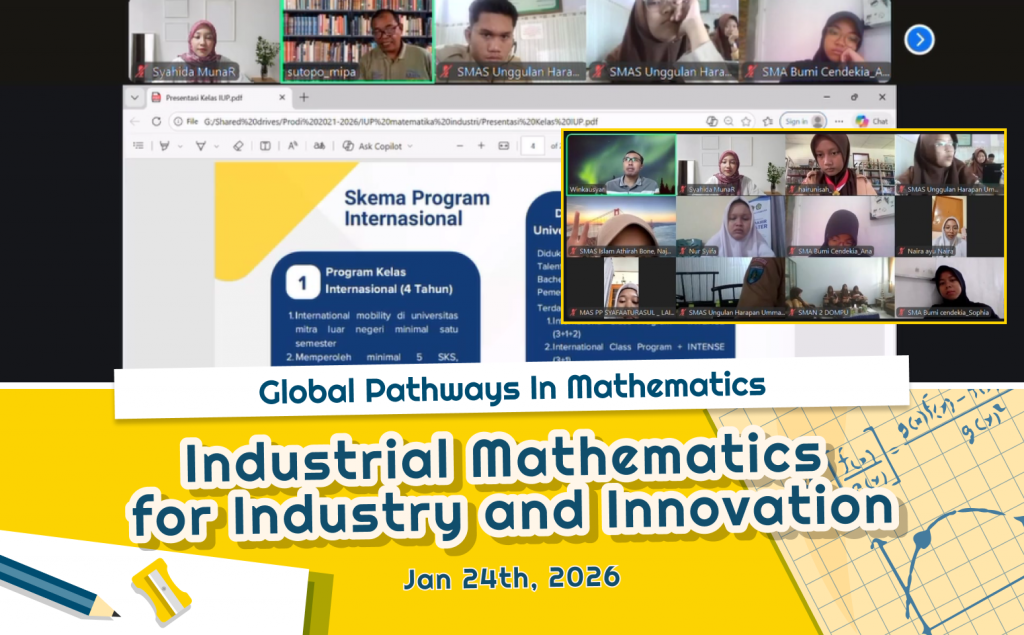

Strong Interest in International Programs Marks Global Pathways Webinar at the Department of Mathematics FMIPA UGM

The Department of Mathematics FMIPA UGM once again held an outreach event for its international programs through a webinar entitled “Global Pathways in Mathematics and Statistics” on Saturday (24/1). Conducted online via Zoom Meeting from 09:00 to 12:00 WIB, the event was attended by hundreds of participants from diverse backgrounds. ...

Farewell and Welcome Ceremony for Department and Study Program Management

The Department of Mathematics, Faculty of Mathematics and Natural Sciences (FMIPA), Universitas Gadjah Mada (UGM), held a farewell and welcome ceremony for the department and study program management on Monday (12 January 2026) at Meeting Room I, 3rd Floor. The event was attended by all academic staff of the Department of Mathematics FMIPA UGM. ...

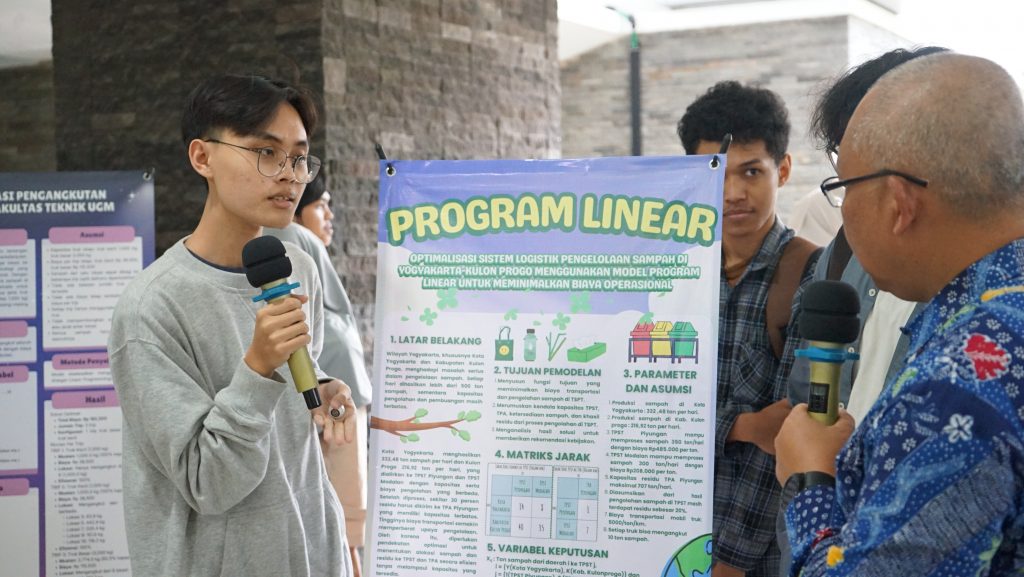

Mathematics PBL Poster Exhibition Showcases Waste Management Solutions from Campus to Community

The Department of Mathematics, Faculty of Mathematics and Natural Sciences (FMIPA), Universitas Gadjah Mada (UGM), held a Poster Exhibition showcasing the outcomes of Problem-Based Learning (PBL) that integrated three courses through waste management case studies, in the corridor of Lecture Building C, FMIPA UGM, from 4 to 19 December 2025. The exhibition was officially opened on Wednesday (10/11) at 3:30 p.m. by the Dean of FMIPA UGM, Prof. Dr. Eng. Kuwat Triyana, M.Si. ...

Ahead of Final Exams, METRIK Room Filled with Intense Discussions and Growing Student Enthusiasm

A different atmosphere could be felt in the undergraduate building of FMIPA UGM during the 14th week of lectures. As the Odd Semester Final Examinations (UAS) 2025/2026 approach, the METRIK Room has recorded a significant surge in consultation activity. As an academic support facility for undergraduate students, METRIK serves as a space for discussions, questions, and resolving lingering academic issues. Students from various cohorts come and go—some carrying stacks of notes, others taking deep breaths before conveying their academic concerns. All are racing to solidify their understanding before the exams, which are just days away. ...

UGM Achieves Outstanding Results at Satria Data 2025

Universitas Gadjah Mada (UGM), through its four representative teams, once again achieved remarkable success at the 2025 Statistika Ria and Festival Sains Data (Satria Data). The final round of the competition was held at the Islamic University of Indonesia, Yogyakarta, on 3–6 November 2025. Satria Data is a national competition organized by the Directorate of Learning and Student Affairs, Directorate General of Higher Education of the Ministry of Higher Education, Science, and Technology, as an effort to enhance students’ competencies in statistics and data science—fields that are increasingly relevant in the digital era. ...

UGM Wins Overall Champion Title at ONMIPA 2025, Secures Three Gold Medals and One Bronze in Mathematics

Universitas Gadjah Mada (UGM) once again achieved an outstanding accomplishment at the 2025 National Mathematics and Natural Sciences Olympiad for Higher Education (ONMIPA-PT), held at Universitas Padjadjaran on 16–20 November 2025. At this national-level competition, UGM won the Overall Champion title by securing 6 gold medals, 1 silver, 6 bronze, and 1 honorable mention across four fields: Physics, Chemistry, Biology, and Mathematics. ...

Department of Mathematics Holds Mini Course on Stochastic Operations Research with Prof. Richard from the University of Twente

The Department of Mathematics, FMIPA UGM, once again organized a Mini Course entitled “Stochastic Operations Research and Its Application in Healthcare Logistics” on 18–20 November 2025 at Meeting Room 1, 3rd Floor, Department of Mathematics. The program featured Prof. Dr. Richard Boucherie, Professor of Stochastic Operations Research from the University of Twente, the Netherlands, as the main speaker. ...

UGM Department of Mathematics, Universitas Alma Ata, and RMI PBNU Collaborate to Address Santri Numeracy Gaps through the “Inclusive Mathematics” Forum

YOGYAKARTA, 15 November 2025 — The Department of Mathematics, Faculty of Mathematics and Natural Sciences (FMIPA), Universitas Gadjah Mada (UGM) held the Pesantren Mathematics Teachers’ Forum themed “Inclusive Mathematics” on 15 November 2025. This program is a collaboration between the Department of Mathematics UGM, the Mathematics Education Study Program of Universitas Alma Ata (UAA) Yogyakarta, and Rabithah Ma’ahid Islamiyah (RMI) PBNU, aimed at strengthening teachers’ capacity in managing diverse levels of mathematical understanding among students and enhancing numeracy literacy within Islamic boarding schools (pesantren). ...
