In today’s digital era, we live in a world filled with data. Every second, new information is transmitted, received, and analyzed by devices across the globe. But how do we measure this information? Does all information hold the same value, or is there a way to quantify its worth? This is where the theory of information entropy plays a crucial role.

The theory of information entropy was introduced by mathematician and engineer Claude Shannon in his 1948 paper “A Mathematical Theory of Communication.” In this context, entropy measures the level of uncertainty or randomness in an information source. Essentially, it quantifies how much “new information” an event or symbol carries, on average.

For example, consider the outcome of a coin toss. There are two possible results, heads or tails, each with an equal probability (50%). In this case, the information gained from the toss is as high as it can be for a two-outcome event (exactly 1 bit), because neither result is more likely than the other. However, if we already know the result beforehand, no new information is gained, as the uncertainty has been eliminated.
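For reference, Shannon defined the entropy of a discrete random variable X, whose outcomes x occur with probabilities p(x), as the expected value of -log2 p(x), measured in bits. Applying it to the fair coin, to a biased coin that lands heads 90% of the time (a hypothetical case added here for contrast), and to a result known in advance gives:

$$
\begin{aligned}
H(X) &= -\sum_{x} p(x)\log_2 p(x) \\
H_{\text{fair}} &= -\left(\tfrac{1}{2}\log_2\tfrac{1}{2} + \tfrac{1}{2}\log_2\tfrac{1}{2}\right) = 1\ \text{bit} \\
H_{\text{biased}} &= -\left(0.9\log_2 0.9 + 0.1\log_2 0.1\right) \approx 0.469\ \text{bits} \\
H_{\text{known}} &= -\left(1\cdot\log_2 1\right) = 0\ \text{bits}
\end{aligned}
$$

The more lopsided the probabilities, the less uncertainty remains, and the entropy falls toward zero.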

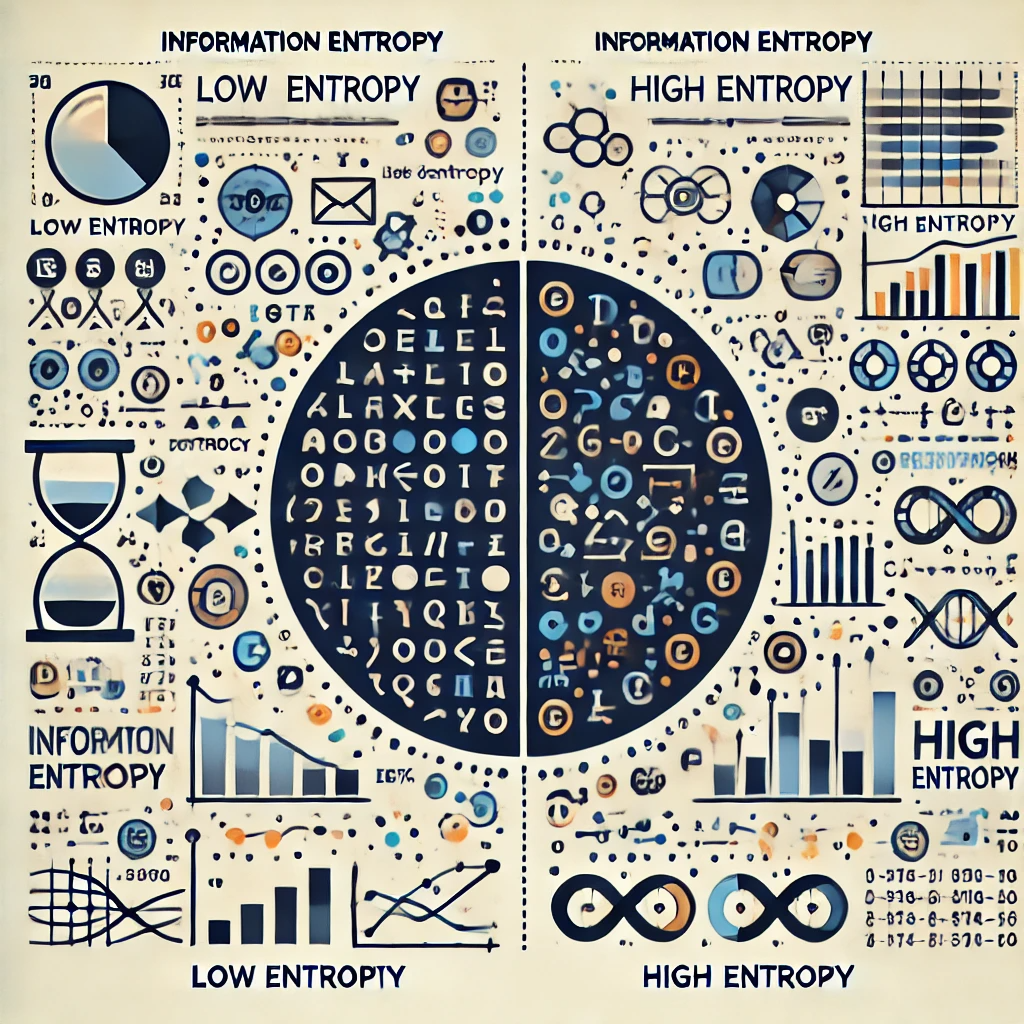

Simply put, information entropy measures how much “surprise” or uncertainty an event carries on average. The greater the uncertainty, the higher the entropy.
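Strictly speaking, the surprise of a single outcome with probability p is its self-information, -log2 p bits, and entropy is that surprise averaged over all outcomes, weighted by their probabilities. A minimal Python sketch of both ideas follows; the function names `surprise` and `entropy` are illustrative only and do not come from any particular library.

```python
import math

def surprise(p: float) -> float:
    """Self-information of an outcome with probability p, in bits."""
    if not 0 < p <= 1:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(p)

def entropy(probs: list[float]) -> float:
    """Shannon entropy in bits: the probability-weighted average surprise.

    Zero-probability outcomes are skipped (0 * log 0 is treated as 0).
    """
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(p * surprise(p) for p in probs if p > 0)

print(surprise(0.5))        # one fair coin flip
print(surprise(0.01))       # a rare outcome is far more surprising
print(entropy([0.5, 0.5]))  # fair coin: maximum uncertainty for two outcomes
print(entropy([0.9, 0.1]))  # biased coin: less uncertainty
print(entropy([1.0]))       # outcome known in advance: no uncertainty
```

A rare outcome is very surprising when it happens, but because it rarely happens it contributes little to the average; that is why the biased coin ends up with lower entropy than the fair one.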

Entropy has significant applications in many fields, such as communication, data compression, and probability theory. In the digital world, we often aim to reduce data size for efficient storage or transmission. Entropy sets a limit on how far data can be compressed: by Shannon’s source coding theorem, no lossless scheme can use fewer bits per symbol, on average, than the entropy of the source. If data has low entropy (i.e., it is highly structured and predictable), it can be compressed effectively. Conversely, data with high entropy is harder to compress because of its randomness.
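To see this in practice, here is a rough sketch that uses Python’s standard-library `zlib` (a DEFLATE compressor, chosen only for convenience, not because the article prescribes it) to compress a predictable byte string and a pseudorandom one of the same length. The data, sizes, and seed are made up for illustration, and exact byte counts will vary with the zlib version.

```python
import random
import zlib

SIZE = 100_000  # bytes per sample (arbitrary illustrative size)

# Highly structured, predictable data: one byte value repeated.
predictable = b"A" * SIZE

# Pseudorandom data: every byte value roughly equally likely.
rng = random.Random(42)  # fixed seed so the example is reproducible
unpredictable = bytes(rng.getrandbits(8) for _ in range(SIZE))

for label, data in [("predictable", predictable), ("random", unpredictable)]:
    compressed = zlib.compress(data, 9)  # level 9 = maximum compression
    ratio = len(compressed) / len(data)
    print(f"{label:>11}: {len(data)} -> {len(compressed)} bytes (ratio {ratio:.3f})")
```

The repeated bytes shrink to a tiny fraction of their original size, while the random bytes do not shrink at all; the output is typically a little larger than the input because of format overhead.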

In communication systems, entropy gives the minimum average number of bits per symbol needed to transmit information without loss. The higher the entropy, the more bits are needed to send a message. This is the basis of optimal coding, in which frequent symbols receive short codewords and rare symbols receive longer ones. Entropy also plays a significant role in cryptography: secure systems must generate keys with high entropy, because low-entropy keys are easier for attackers to predict.
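The optimal coding mentioned above can be illustrated with Huffman coding, one classic optimal prefix code; it is chosen here as an example, since the article does not name a specific scheme. The sketch builds a Huffman code from the symbol frequencies of a hypothetical sample message and compares its average code length with the message’s empirical entropy. When a message has at least two distinct symbols, the Huffman average length is at least H and less than H + 1 bits per symbol.

```python
import heapq
import math
from collections import Counter

def huffman_code(text: str) -> dict[str, str]:
    """Build a Huffman code (symbol -> bit string) from symbol counts in text."""
    freq = Counter(text)
    if len(freq) == 1:
        # A single distinct symbol still needs one bit per occurrence.
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, unique tie-breaker, {symbol: partial code}).
    heap = [(count, i, {sym: ""}) for i, (sym, count) in enumerate(freq.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        # Merge the two lightest subtrees, prefixing 0 to one side and 1 to the other.
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, tie, merged))
        tie += 1
    return heap[0][2]

def entropy_bits(text: str) -> float:
    """Empirical Shannon entropy of the symbols in text, in bits per symbol."""
    n = len(text)
    return sum((c / n) * math.log2(n / c) for c in Counter(text).values())

message = "ABRACADABRA"  # hypothetical sample message
code = huffman_code(message)
avg_len = sum(len(code[s]) for s in message) / len(message)

print(code)
print(f"entropy:                {entropy_bits(message):.3f} bits/symbol")
print(f"Huffman average length: {avg_len:.3f} bits/symbol")
```

The frequent symbol “A” gets the shortest codeword, and the average length lands just above the entropy, which is the bound no lossless code can beat.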

Imagine sending a message composed of symbols (e.g., letters). If you repeatedly send the letter “A,” the receiver will not be surprised, as they already know what to expect. In this case, the information entropy is low (in fact zero, if “A” is the only symbol ever sent). However, if you send letters chosen at random, the receiver faces more uncertainty with each new symbol. Here, the entropy is higher because each position could hold any of many possible letters.
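A small Python sketch can make this concrete by estimating entropy from how often each symbol appears in a message. The two messages are illustrative assumptions: 1,000 repetitions of “A” versus 1,000 letters drawn uniformly at random from the 26 uppercase English letters. The estimate treats each symbol as drawn independently, so it ignores longer patterns such as repeated words.

```python
import math
import random
import string
from collections import Counter

def message_entropy(message: str) -> float:
    """Empirical entropy of a message in bits per symbol, from symbol frequencies."""
    counts = Counter(message)
    n = len(message)
    return sum((c / n) * math.log2(n / c) for c in counts.values())

repeated = "A" * 1000
rng = random.Random(0)  # fixed seed for reproducibility
random_letters = "".join(rng.choice(string.ascii_uppercase) for _ in range(1000))

print(f"repeated 'A':   {message_entropy(repeated):.3f} bits/symbol")
print(f"random letters: {message_entropy(random_letters):.3f} bits/symbol")
print(f"maximum for 26 letters: {math.log2(26):.3f} bits/symbol")
```

The repeated message scores 0 bits per symbol, while the random one comes close to log2 26 ≈ 4.70 bits, the maximum, which is reached only when all 26 letters are exactly equally likely.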

Claude Shannon defined entropy mathematically, but the underlying idea is also relevant in everyday life, since uncertainty appears in nearly every aspect of the world. For example, when playing games or watching sports, we tend to be more engaged when the outcome is unpredictable. In such cases, uncertainty (or entropy) enhances the experience.

On the other hand, in our daily lives, we often seek patterns or order to reduce entropy. For instance, we create regular schedules or plan vacations meticulously. Knowing what to expect makes us feel more comfortable because it reduces uncertainty.

The theory of information entropy offers new insights into how we understand and manage data and information. In an increasingly connected world, where information flows rapidly and evolves constantly, the ability to measure and comprehend entropy becomes ever more critical. Whether in communication, cryptography, data compression, or even daily experiences, entropy provides a lens through which we can understand how uncertainty shapes our interactions with the digital and physical worlds.

With this theory, we can make smarter decisions, design more efficient systems, and perhaps gain a better understanding of the ever-changing world around us.

Keywords: Entropy Theory, Optimal Code, Digital.

References:

- Shannon, C. E. (1948). A Mathematical Theory of Communication. The Bell System Technical Journal, 27(3), 379–423.

- Cover, T. M., & Thomas, J. A. (2006). Elements of Information Theory (2nd ed.). Wiley-Interscience.

- MacKay, D. J. C. (2003). Information Theory, Inference, and Learning Algorithms. Cambridge University Press.

- Jaynes, E. T. (2003). Probability Theory: The Logic of Science. Cambridge University Press.

Author: Meilinda Roestiyana Dewy